Description

Our overhead measurement automation (#6611) can instrument an app and measure it before and after adding Sentry and Replay.

The problem is that the values from different runs are wildly inconsistent: even without any code changes, they differ a lot from run to run. This issue tracks some ideas to improve this.

Problems and possible reasons

- When small numbers show up (e.g. 0.001), a small absolute difference can translate into a large percentage. We often see 1000% on values that are not relevant (see screenshot below).

- The GHA runner is a VM on shared resources, and a single thread is allocated to each run. Benchmarks run in CI often have this issue of inconsistent values.

- We might be missing a warm-up phase that lets the JIT compile code, loads things into memory, etc., and whose results are not counted towards the measurements.
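To illustrate the first point, here is a minimal sketch of how a percentage could be suppressed when the baseline is too small to be meaningful. The helper name `describeChange` and the `minBaseline` threshold are hypothetical and not part of the existing automation; the right threshold would depend on the metric and its unit.

```typescript
// Hypothetical helper: the name and the default threshold are illustrative only.
interface Change {
  absolute: number;
  // null when the baseline is too small for a percentage to be meaningful
  percent: number | null;
}

function describeChange(before: number, after: number, minBaseline = 0.01): Change {
  const absolute = after - before;
  const percent = Math.abs(before) < minBaseline ? null : (absolute * 100) / before;
  return { absolute, percent };
}

// 0.001 -> 0.011 is roughly a 1000% increase in relative terms,
// but only about 0.01 in absolute terms, so no percentage is reported.
const tiny = describeChange(0.001, 0.011);

// 200 -> 210 has a meaningful baseline, so the percentage is kept.
const normal = describeChange(200, 210);
```

Reporting the absolute difference next to the percentage (or instead of it for tiny baselines) keeps irrelevant values from dominating the report.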

- [ ] Make sure we can easily run the same thing locally (with numbers being compared) to make sure numbers are consistent

- [ ] If the runner is the culprit, use [GitHub Actions larger runners](https://docs.github.com/en/actions/using-github-hosted-runners/using-larger-runners)

- [ ] Once numbers are stable across runs, take another stab at the test app (https://github.com/getsentry/sentry-javascript/pull/7300)
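One way to check consistency locally is to discard a few warm-up runs and summarize the remaining samples with a median, which is less sensitive to outliers than a mean. The following is a rough sketch under that assumption; `measureOnce`, `measureStable`, and the default run counts are hypothetical names and values, not the API of the existing tooling.

```typescript
// Hypothetical sketch: `measureOnce` stands in for whatever produces one metric value per run.
function median(values: number[]): number {
  if (values.length === 0) {
    throw new Error('median requires at least one value');
  }
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 0 ? (sorted[mid - 1] + sorted[mid]) / 2 : sorted[mid];
}

async function measureStable(
  measureOnce: () => Promise<number>,
  warmupRuns = 3,
  measuredRuns = 10,
): Promise<{ median: number; min: number; max: number }> {
  // Warm-up: run the measurement but discard the results.
  for (let i = 0; i < warmupRuns; i++) {
    await measureOnce();
  }

  // Measured runs: keep every sample.
  const samples: number[] = [];
  for (let i = 0; i < measuredRuns; i++) {
    samples.push(await measureOnce());
  }

  return {
    median: median(samples),
    min: Math.min(...samples),
    max: Math.max(...samples),
  };
}
```

Comparing the `min` to `max` spread between two runs of the same commit gives a quick signal of whether the numbers are stable enough to compare across branches.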

Make it customer facing

However, we don't yet have metrics that we can report as a result of the overhead measurements.

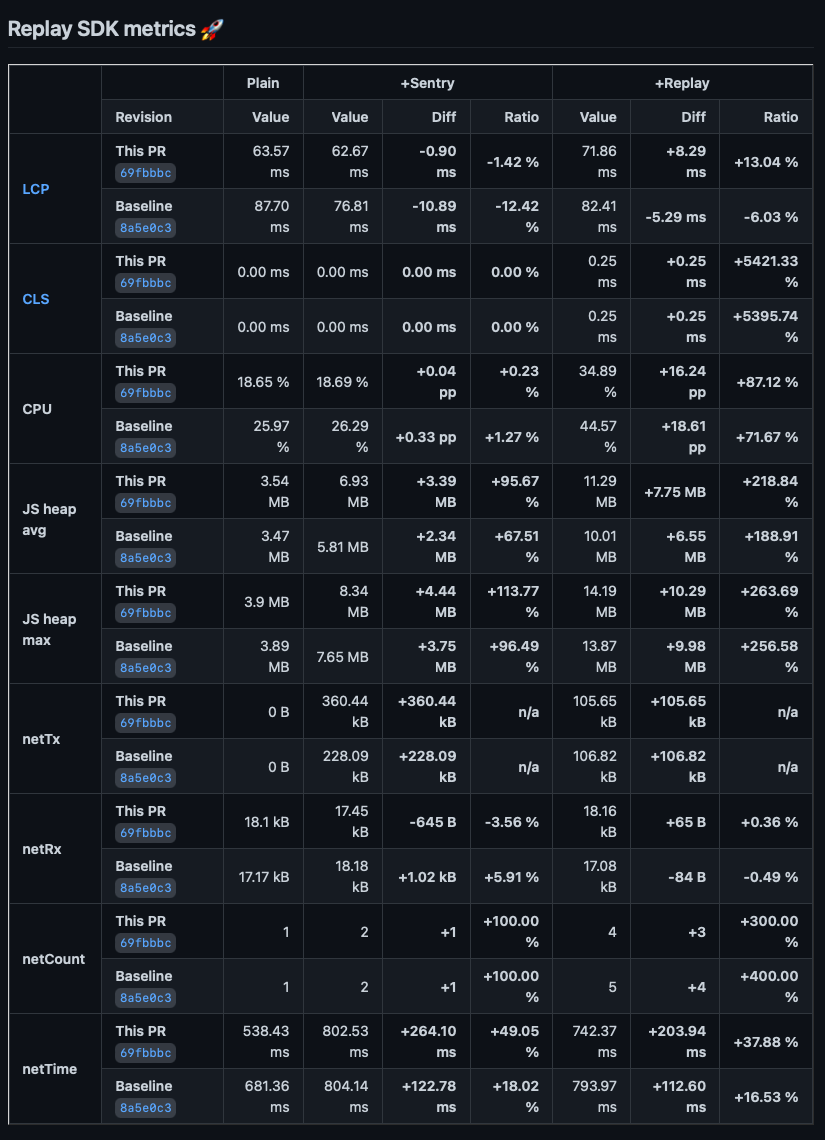

Our PRs have an automation that runs some measurements and compares them with the base branch:

A new sample/test app that better represents a normal website was recently merged:

This is useful for us to develop and verify the impact of changes. But in its current form, it isn't something that can easily be added to our docs, in the overhead section referred to above.

It might also need a bit of polishing, since showing a 5000% increase might be surprising, even though it is actually not a big deal once you look at why:

The goal is to be able to point users to some numbers. Ideally something simple, and optionally link to a repo where they can see how things work and run it themselves.

- [ ] Surface the measurement values for releases in our docs: https://docs.sentry.io/product/session-replay/performance-overhead/