diff --git a/README.md b/README.md

index 8cf6bdd5..ac3efbed 100644

--- a/README.md

+++ b/README.md

@@ -34,14 +34,6 @@ Then add the plugin to your `netlify.toml` configuration file:

[[plugins]]

package = "@netlify/plugin-lighthouse"

- # optional, fails build when a category is below a threshold

- [plugins.inputs.thresholds]

- performance = 0.9

- accessibility = 0.9

- best-practices = 0.9

- seo = 0.9

- pwa = 0.9

-

# optional, deploy the lighthouse report to a path under your site

[plugins.inputs.audits]

output_path = "reports/lighthouse.html"

@@ -51,7 +43,7 @@ The lighthouse scores are automatically printed to the **Deploy log** in the Net

```

2:35:07 PM: ────────────────────────────────────────────────────────────────

-2:35:07 PM: 2. onPostBuild command from @netlify/plugin-lighthouse

+2:35:07 PM: @netlify/plugin-lighthouse (onSuccess event)

2:35:07 PM: ────────────────────────────────────────────────────────────────

2:35:07 PM:

2:35:07 PM: Serving and scanning site from directory dist

@@ -73,32 +65,25 @@ The lighthouse scores are automatically printed to the **Deploy log** in the Net

To customize how Lighthouse runs audits, you can make changes to the `netlify.toml` file.

-By default, the plugin will serve and audit the build directory of the site, inspecting the `index.html`.

-You can customize the behavior via the `audits` input:

+By default, the plugin runs after your build is deployed, auditing the home path `/` on the live deploy permalink.

+To add further configuration, or to inspect a different path or multiple additional paths, edit the `netlify.toml` file:

```toml

[[plugins]]

package = "@netlify/plugin-lighthouse"

+ # Set minimum thresholds for each report area

[plugins.inputs.thresholds]

performance = 0.9

- # to audit a sub path of the build directory

+ # to audit a path other than /

# route1 audit will use the top level thresholds

[[plugins.inputs.audits]]

path = "route1"

- # you can specify output_path per audit, relative to the path

+ # you can optionally specify an output_path per audit, relative to the path, where HTML report output will be saved

output_path = "reports/route1.html"

- # to audit an HTML file other than index.html in the build directory

- [[plugins.inputs.audits]]

- path = "contact.html"

-

- # to audit an HTML file other than index.html in a sub path of the build directory

- [[plugins.inputs.audits]]

- path = "pages/contact.html"

-

# to audit a specific absolute url

[[plugins.inputs.audits]]

url = "https://www.example.com"

@@ -107,11 +92,42 @@ You can customize the behavior via the `audits` input:

[plugins.inputs.audits.thresholds]

performance = 0.8

+```

+

+#### Fail a deploy based on score thresholds

+

+By default, the Lighthouse plugin will run _after_ your deploy has succeeded, auditing the live deploy content.

+

+To run the plugin _before_ the deploy is live, use the `fail_deploy_on_score_thresholds` input to instead run during the `onPostBuild` event.

+This will statically serve your build output folder, and audit the `index.html` (or other file if specified as below). Please note that sites or site paths using SSR/ISR (server-side rendering or Incremental Static Regeneration) cannot be served and audited in this way.

+

+Using this configuration, if minimum threshold scores are supplied and not met, the deploy will fail. Set the threshold based on `performance`, `accessibility`, `best-practices`, `seo`, or `pwa`.

+

+```toml

+[[plugins]]

+ package = "@netlify/plugin-lighthouse"

+

+ # Set the plugin to run prior to deploy, failing the build if minimum thresholds aren't met

+ [plugins.inputs]

+ fail_deploy_on_score_thresholds = "true"

+

+ # Set minimum thresholds for each report area

+ [plugins.inputs.thresholds]

+ performance = 0.9

+ accessibility = 0.7

+

+ # to audit an HTML file other than index.html in the build directory

+ [[plugins.inputs.audits]]

+ path = "contact.html"

+

+ # to audit an HTML file other than index.html in a sub path of the build directory

+ [[plugins.inputs.audits]]

+ path = "pages/contact.html"

+

# to serve only a sub directory of the build directory for an audit

# pages/index.html will be audited, and files outside of this directory will not be served

[[plugins.inputs.audits]]

serveDir = "pages"

-

```

### Run Lighthouse audits for desktop

@@ -148,18 +164,6 @@ Updates to `netlify.toml` will take effect for new builds.

locale = "es" # generates Lighthouse reports in Español

```

-### Fail Builds Based on Score Thresholds

-

-By default, the Lighthouse plugin will report the findings in the deploy logs. To fail a build based on a specific score, specify the inputs thresholds in your `netlify.toml` file. Set the threshold based on `performance`, `accessibility`, `best-practices`, `seo`, or `pwa`.

-

-```toml

-[[plugins]]

- package = "@netlify/plugin-lighthouse"

-

- [plugins.inputs.thresholds]

- performance = 0.9

-```

-

### Run Lighthouse Locally

Fork and clone this repo.

@@ -173,21 +177,13 @@ yarn local

## Preview Lighthouse results within the Netlify UI

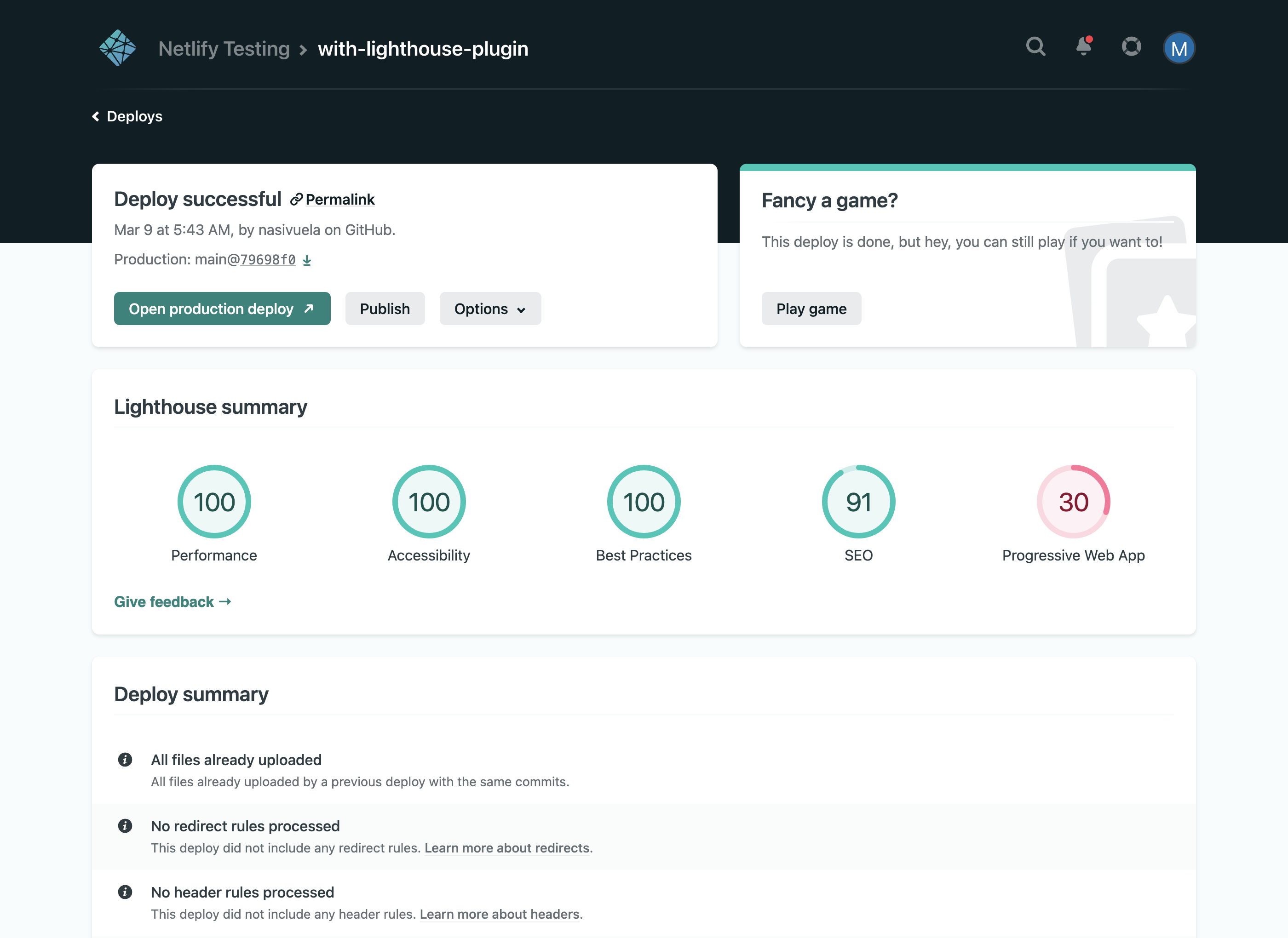

-Netlify offers an experimental feature through Netlify Labs that allows you to view Lighthouse scores for each of your builds on your site's Deploy Details page with a much richer format.

-

-You'll need to install the [Lighthouse build plugin](https://app.netlify.com/plugins/@netlify/plugin-lighthouse/install) on your site and then enable this experimental feature through Netlify Labs.

-

- -

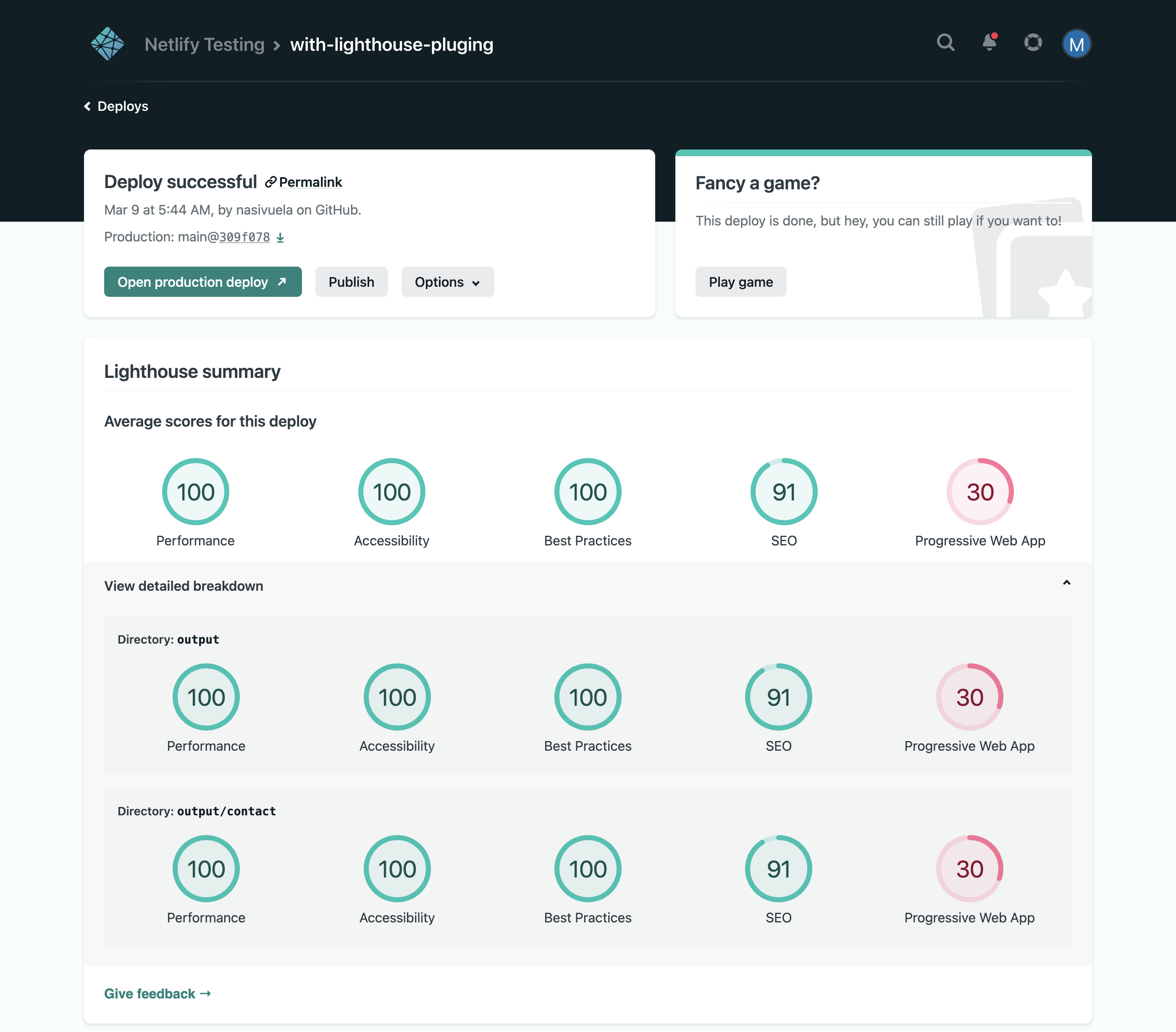

-If you have multiple audits (directories, paths, etc) defined in your build, we will display a roll-up of the average Lighthouse scores for all the current build's audits plus the results for each individual audit.

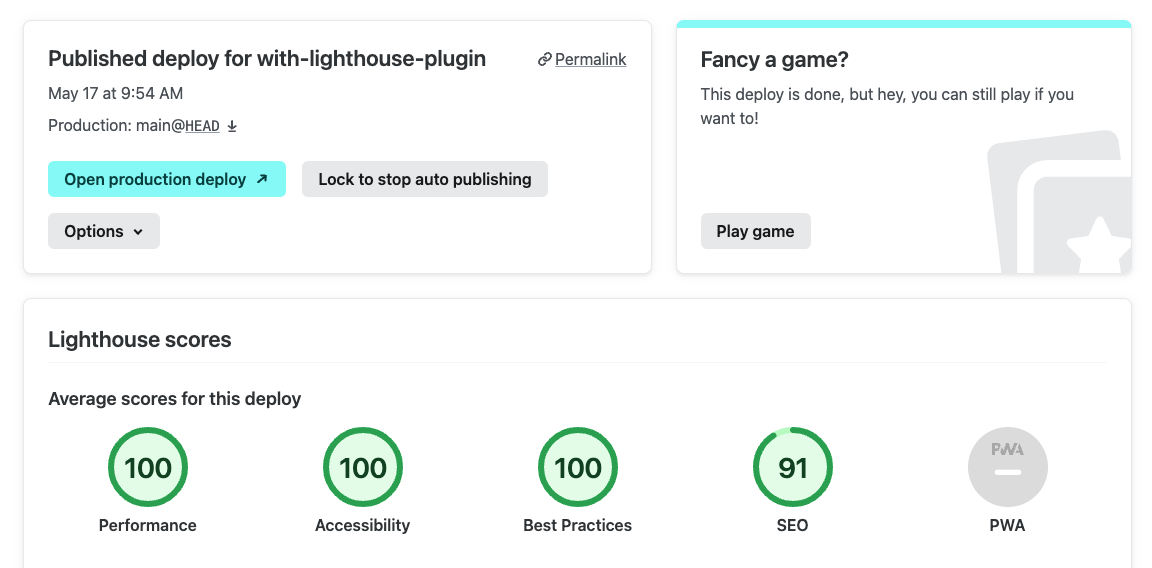

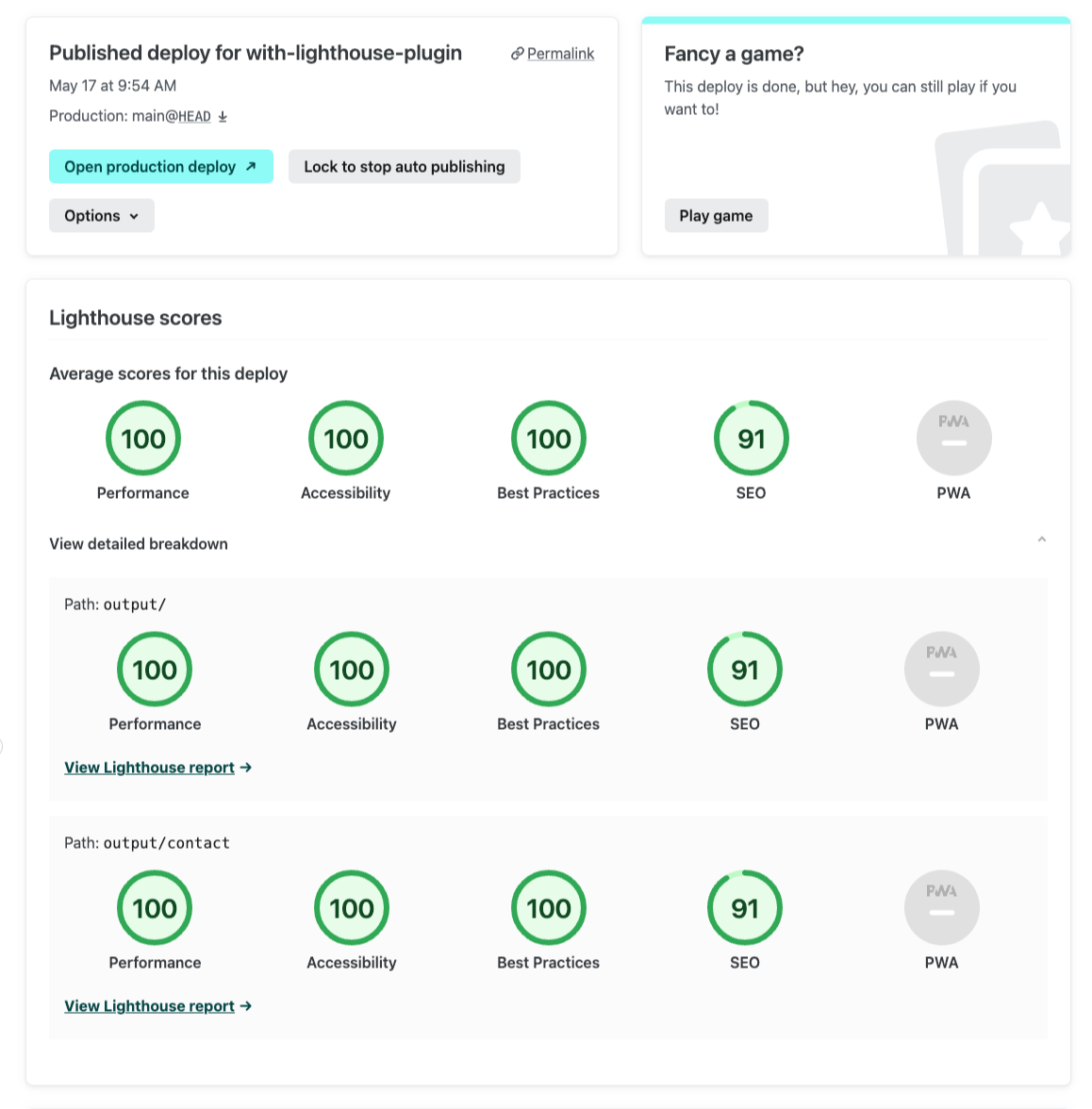

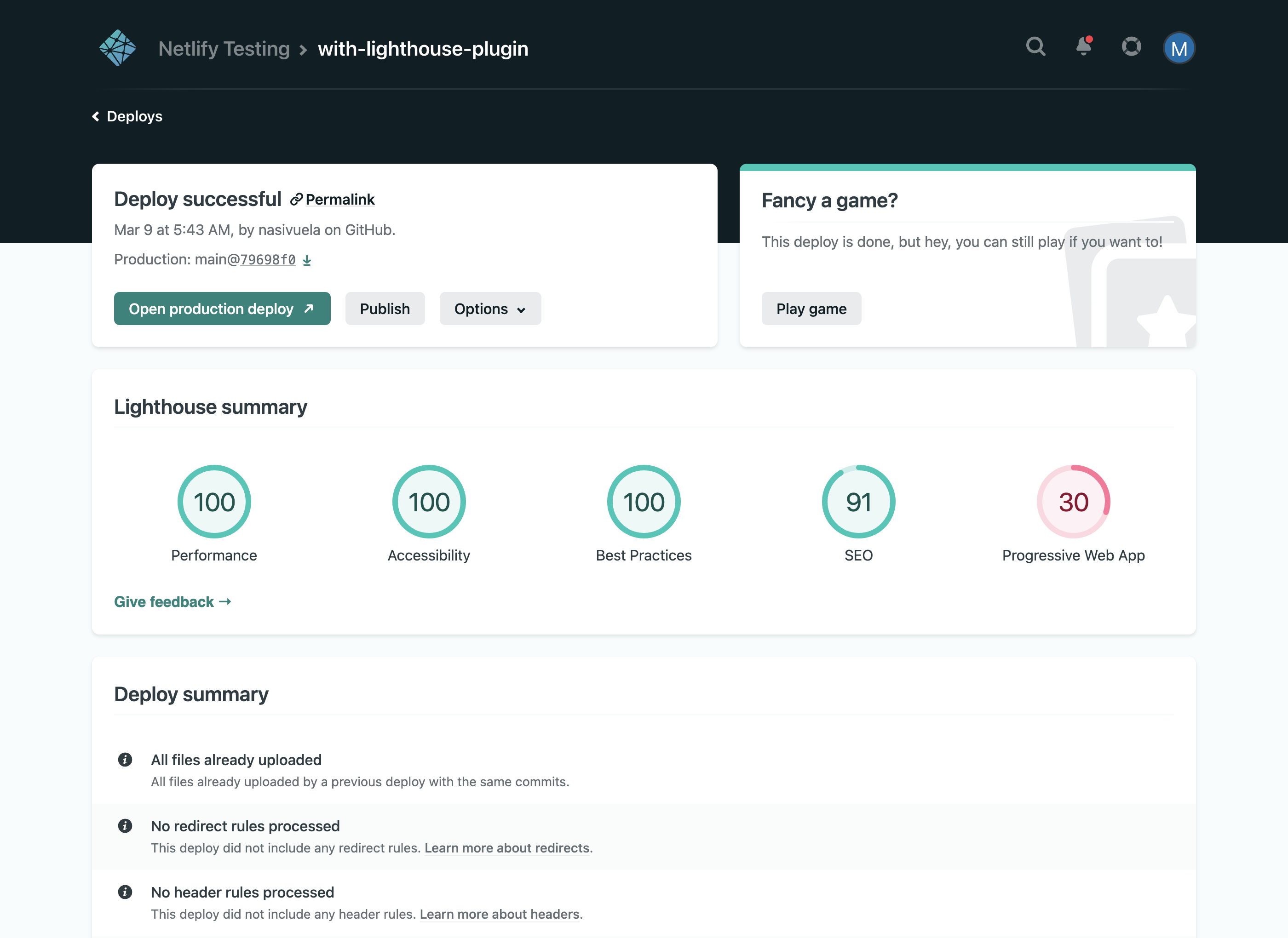

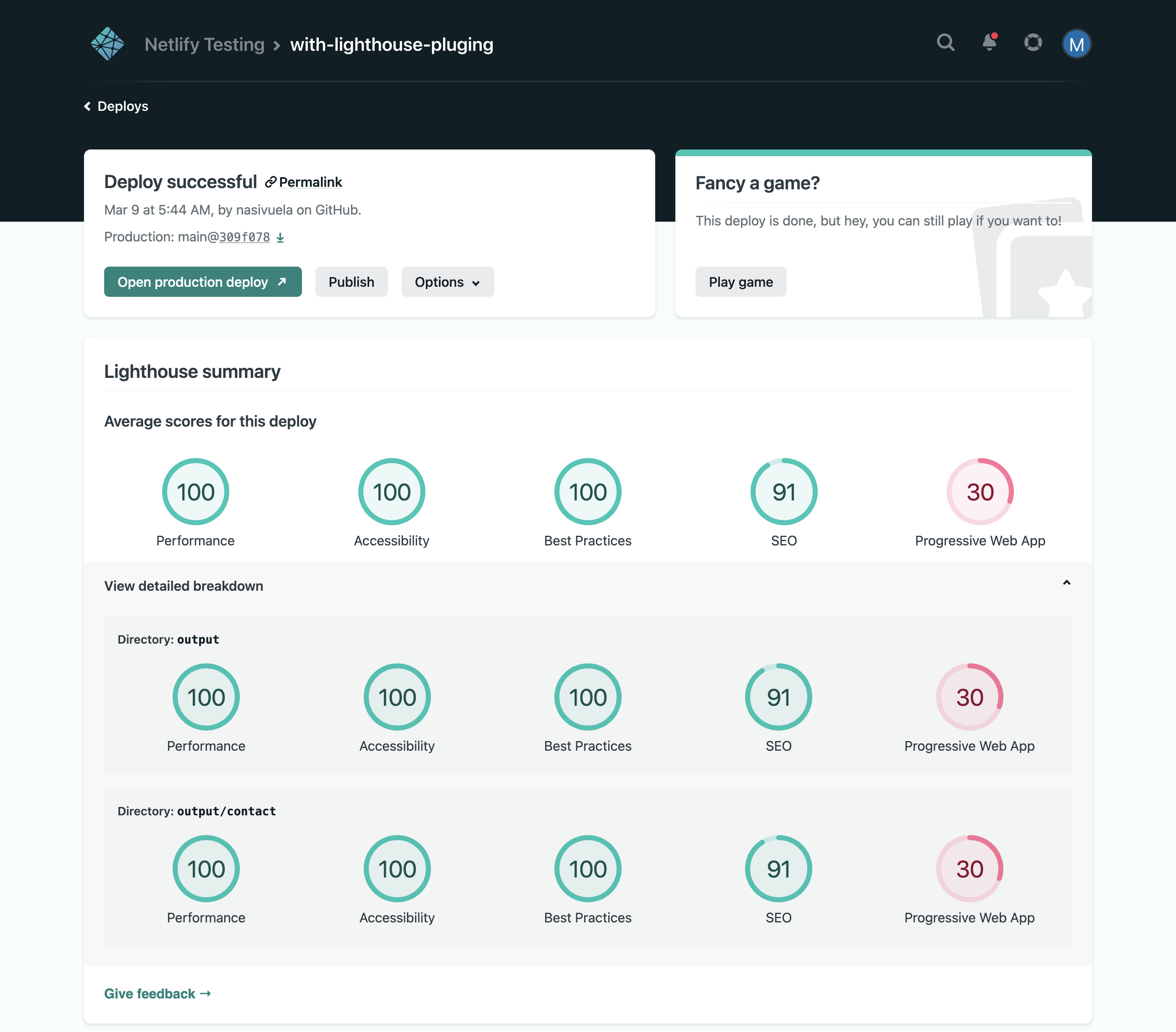

+The Netlify UI allows you to view Lighthouse scores for each of your builds on your site's Deploy Details page with a much richer format.

+

+You'll need to first install the [Lighthouse build plugin](https://app.netlify.com/plugins/@netlify/plugin-lighthouse/install) on your site.

+

-Some items of note:

-

-- The [Lighthouse Build Plugin](https://app.netlify.com/plugins/@netlify/plugin-lighthouse/install) must be installed on your site(s) in order for these score visualizations to be displayed.

-- This Labs feature is currently only enabled at the user-level, so it will need to be enabled for each individual team member that wishes to see the Lighthouse scores displayed.

+If you have multiple audits (e.g. multiple paths) defined in your build, we will display a roll-up of the average Lighthouse scores for all the current build's audits plus the results for each individual audit.

-

-Learn more in our official [Labs docs](https://docs.netlify.com/netlify-labs/experimental-features/lighthouse-visualization/).

-

-We have a lot planned for this feature and will be adding functionality regularly, but we'd also love to hear your thoughts. Please [share your feedback](https://netlify.qualtrics.com/jfe/form/SV_1NTbTSpvEi0UzWe) about this experimental feature and tell us what you think.

diff --git a/manifest.yml b/manifest.yml

index cd0e27ba..05fe5979 100644

--- a/manifest.yml

+++ b/manifest.yml

@@ -21,6 +21,6 @@ inputs:

required: false

description: Lighthouse-specific settings, used to modify reporting criteria

- - name: run_on_success

+ - name: fail_deploy_on_score_thresholds

required: false

- description: (Beta) Run Lighthouse against the deployed site

+ description: Fail deploy if minimum threshold scores are not met

diff --git a/netlify.toml b/netlify.toml

index 44ba2ae5..0e80ea55 100644

--- a/netlify.toml

+++ b/netlify.toml

@@ -11,6 +11,9 @@ package = "./src/index.js"

[plugins.inputs]

output_path = "reports/lighthouse.html"

+# Note: Required for our Cypress smoke tests

+fail_deploy_on_score_thresholds = "true"

+

[plugins.inputs.thresholds]

performance = 0.9

diff --git a/package-lock.json b/package-lock.json

index 24e28c9e..4bacc9e4 100644

--- a/package-lock.json

+++ b/package-lock.json

@@ -6,7 +6,7 @@

"packages": {

"": {

"name": "@netlify/plugin-lighthouse",

- "version": "4.0.7",

+ "version": "4.1.1",

"license": "MIT",

"dependencies": {

"chalk": "^4.1.0",

diff --git a/package.json b/package.json

index a498a729..acc8c018 100644

--- a/package.json

+++ b/package.json

@@ -4,8 +4,8 @@

"description": "Netlify Plugin to run Lighthouse on each build",

"main": "src/index.js",

"scripts": {

- "local": "node -e 'import(\"./src/index.js\").then(index => index.default()).then(events => events.onPostBuild());'",

- "local-onsuccess": "LIGHTHOUSE_RUN_ON_SUCCESS=true node -e 'import(\"./src/index.js\").then(index => index.default()).then(events => events.onSuccess());'",

+ "local": "node -e 'import(\"./src/index.js\").then(index => index.default()).then(events => events.onSuccess());'",

+ "local-onpostbuild": "node -e 'import(\"./src/index.js\").then(index => index.default({fail_deploy_on_score_thresholds: \"true\"})).then(events => events.onPostBuild());'",

"lint": "eslint 'src/**/*.js'",

"format": "prettier --write 'src/**/*.js'",

"test": "node --experimental-vm-modules node_modules/jest/bin/jest.js --collect-coverage --maxWorkers=1",

diff --git a/src/e2e/fail-threshold-onpostbuild.test.js b/src/e2e/fail-threshold-onpostbuild.test.js

index 0db73891..fe1e357b 100644

--- a/src/e2e/fail-threshold-onpostbuild.test.js

+++ b/src/e2e/fail-threshold-onpostbuild.test.js

@@ -45,12 +45,16 @@ describe('lighthousePlugin with failed threshold run (onPostBuild)', () => {

'- PWA: 30',

];

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin({

+ fail_deploy_on_score_thresholds: 'true',

+ }).onPostBuild({ utils: mockUtils });

expect(formatMockLog(console.log.mock.calls)).toEqual(logs);

});

it('should not output expected success payload', async () => {

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin({

+ fail_deploy_on_score_thresholds: 'true',

+ }).onPostBuild({ utils: mockUtils });

expect(mockUtils.status.show).not.toHaveBeenCalledWith();

});

@@ -67,7 +71,9 @@ describe('lighthousePlugin with failed threshold run (onPostBuild)', () => {

" 'Manifest doesn't have a maskable icon' received a score of 0",

];

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin({

+ fail_deploy_on_score_thresholds: 'true',

+ }).onPostBuild({ utils: mockUtils });

const resultError = console.error.mock.calls[0][0];

expect(stripAnsi(resultError).split('\n').filter(Boolean)).toEqual(error);

});

@@ -99,7 +105,9 @@ describe('lighthousePlugin with failed threshold run (onPostBuild)', () => {

],

};

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin({

+ fail_deploy_on_score_thresholds: 'true',

+ }).onPostBuild({ utils: mockUtils });

const [resultMessage, resultPayload] =

mockUtils.build.failBuild.mock.calls[0];

diff --git a/src/e2e/fail-threshold-onsuccess.test.js b/src/e2e/fail-threshold-onsuccess.test.js

index 119e1db7..0d57c93c 100644

--- a/src/e2e/fail-threshold-onsuccess.test.js

+++ b/src/e2e/fail-threshold-onsuccess.test.js

@@ -22,7 +22,6 @@ describe('lighthousePlugin with failed threshold run (onSuccess)', () => {

beforeEach(() => {

resetEnv();

jest.clearAllMocks();

- process.env.LIGHTHOUSE_RUN_ON_SUCCESS = 'true';

process.env.DEPLOY_URL = 'https://www.netlify.com';

process.env.THRESHOLDS = JSON.stringify({

performance: 1,

diff --git a/src/e2e/lib/reset-env.js b/src/e2e/lib/reset-env.js

index a6b817d6..4b6eb81c 100644

--- a/src/e2e/lib/reset-env.js

+++ b/src/e2e/lib/reset-env.js

@@ -1,7 +1,6 @@

const resetEnv = () => {

delete process.env.OUTPUT_PATH;

delete process.env.PUBLISH_DIR;

- delete process.env.RUN_ON_SUCCESS;

delete process.env.SETTINGS;

delete process.env.THRESHOLDS;

delete process.env.URL;

diff --git a/src/e2e/not-found-onpostbuild.test.js b/src/e2e/not-found-onpostbuild.test.js

index 56403d76..a48ac1a6 100644

--- a/src/e2e/not-found-onpostbuild.test.js

+++ b/src/e2e/not-found-onpostbuild.test.js

@@ -32,7 +32,9 @@ describe('lighthousePlugin with single not-found run (onPostBuild)', () => {

'Lighthouse was unable to reliably load the page you requested. Make sure you are testing the correct URL and that the server is properly responding to all requests. (Status code: 404)',

];

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin({

+ fail_deploy_on_score_thresholds: 'true',

+ }).onPostBuild({ utils: mockUtils });

expect(formatMockLog(console.log.mock.calls)).toEqual(logs);

});

@@ -52,14 +54,18 @@ describe('lighthousePlugin with single not-found run (onPostBuild)', () => {

"Error testing 'example/this-page-does-not-exist': Lighthouse was unable to reliably load the page you requested. Make sure you are testing the correct URL and that the server is properly responding to all requests. (Status code: 404)",

};

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin({

+ fail_deploy_on_score_thresholds: 'true',

+ }).onPostBuild({ utils: mockUtils });

expect(mockUtils.status.show).toHaveBeenCalledWith(payload);

});

it('should not output errors, or call fail events', async () => {

mockConsoleError();

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin({

+ fail_deploy_on_score_thresholds: 'true',

+ }).onPostBuild({ utils: mockUtils });

expect(console.error).not.toHaveBeenCalled();

expect(mockUtils.build.failBuild).not.toHaveBeenCalled();

expect(mockUtils.build.failPlugin).not.toHaveBeenCalled();

diff --git a/src/e2e/not-found-onsuccess.test.js b/src/e2e/not-found-onsuccess.test.js

index 824f132d..99640ba4 100644

--- a/src/e2e/not-found-onsuccess.test.js

+++ b/src/e2e/not-found-onsuccess.test.js

@@ -19,7 +19,6 @@ describe('lighthousePlugin with single not-found run (onSuccess)', () => {

beforeEach(() => {

resetEnv();

jest.clearAllMocks();

- process.env.LIGHTHOUSE_RUN_ON_SUCCESS = 'true';

process.env.DEPLOY_URL = 'https://www.netlify.com';

process.env.AUDITS = JSON.stringify([{ path: 'this-page-does-not-exist' }]);

});

diff --git a/src/e2e/settings-locale.test.js b/src/e2e/settings-locale.test.js

index 6f4b4c22..41d60d9c 100644

--- a/src/e2e/settings-locale.test.js

+++ b/src/e2e/settings-locale.test.js

@@ -30,14 +30,14 @@ describe('lighthousePlugin with custom locale', () => {

jest.clearAllMocks();

process.env.PUBLISH_DIR = 'example';

process.env.SETTINGS = JSON.stringify({ locale: 'es' });

+ process.env.DEPLOY_URL = 'https://www.netlify.com';

});

it('should output expected log content', async () => {

const logs = [

'Generating Lighthouse report. This may take a minute…',

- 'Running Lighthouse on example/ using the “es” locale',

- 'Serving and scanning site from directory example',

- 'Lighthouse scores for example/',

+ 'Running Lighthouse on / using the “es” locale',

+ 'Lighthouse scores for /',

'- Rendimiento: 100',

'- Accesibilidad: 100',

'- Prácticas recomendadas: 100',

@@ -45,7 +45,7 @@ describe('lighthousePlugin with custom locale', () => {

'- PWA: 30',

];

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin().onSuccess({ utils: mockUtils });

expect(formatMockLog(console.log.mock.calls)).toEqual(logs);

});

@@ -58,7 +58,7 @@ describe('lighthousePlugin with custom locale', () => {

installable: false,

locale: 'es',

},

- path: 'example/',

+ path: '/',

report: 'Lighthouse Report (mock)',

summary: {

accessibility: 100,

@@ -70,17 +70,17 @@ describe('lighthousePlugin with custom locale', () => {

},

],

summary:

- "Summary for path 'example/': Rendimiento: 100, Accesibilidad: 100, Prácticas recomendadas: 100, SEO: 91, PWA: 30",

+ "Summary for path '/': Rendimiento: 100, Accesibilidad: 100, Prácticas recomendadas: 100, SEO: 91, PWA: 30",

};

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin().onSuccess({ utils: mockUtils });

expect(mockUtils.status.show).toHaveBeenCalledWith(payload);

});

it('should not output errors, or call fail events', async () => {

mockConsoleError();

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin().onSuccess({ utils: mockUtils });

expect(console.error).not.toHaveBeenCalled();

expect(mockUtils.build.failBuild).not.toHaveBeenCalled();

expect(mockUtils.build.failPlugin).not.toHaveBeenCalled();

diff --git a/src/e2e/settings-preset.test.js b/src/e2e/settings-preset.test.js

index a9252087..a22c1788 100644

--- a/src/e2e/settings-preset.test.js

+++ b/src/e2e/settings-preset.test.js

@@ -24,21 +24,21 @@ describe('lighthousePlugin with custom device preset', () => {

jest.clearAllMocks();

process.env.PUBLISH_DIR = 'example';

process.env.SETTINGS = JSON.stringify({ preset: 'desktop' });

+ process.env.DEPLOY_URL = 'https://www.netlify.com';

});

it('should output expected log content', async () => {

const logs = [

'Generating Lighthouse report. This may take a minute…',

- 'Running Lighthouse on example/ using the “desktop” preset',

- 'Serving and scanning site from directory example',

- 'Lighthouse scores for example/',

+ 'Running Lighthouse on / using the “desktop” preset',

+ 'Lighthouse scores for /',

'- Performance: 100',

'- Accessibility: 100',

'- Best Practices: 100',

'- SEO: 91',

'- PWA: 30',

];

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin().onSuccess({ utils: mockUtils });

expect(formatMockLog(console.log.mock.calls)).toEqual(logs);

});

@@ -51,7 +51,7 @@ describe('lighthousePlugin with custom device preset', () => {

installable: false,

locale: 'en-US',

},

- path: 'example/',

+ path: '/',

report: 'Lighthouse Report (mock)',

summary: {

accessibility: 100,

@@ -63,17 +63,17 @@ describe('lighthousePlugin with custom device preset', () => {

},

],

summary:

- "Summary for path 'example/': Performance: 100, Accessibility: 100, Best Practices: 100, SEO: 91, PWA: 30",

+ "Summary for path '/': Performance: 100, Accessibility: 100, Best Practices: 100, SEO: 91, PWA: 30",

};

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin().onSuccess({ utils: mockUtils });

expect(mockUtils.status.show).toHaveBeenCalledWith(payload);

});

it('should not output errors, or call fail events', async () => {

mockConsoleError();

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin().onSuccess({ utils: mockUtils });

expect(console.error).not.toHaveBeenCalled();

expect(mockUtils.build.failBuild).not.toHaveBeenCalled();

expect(mockUtils.build.failPlugin).not.toHaveBeenCalled();

diff --git a/src/e2e/success-onpostbuild.test.js b/src/e2e/success-onpostbuild.test.js

index 2917ba5a..c612546b 100644

--- a/src/e2e/success-onpostbuild.test.js

+++ b/src/e2e/success-onpostbuild.test.js

@@ -34,7 +34,9 @@ describe('lighthousePlugin with single report per run (onPostBuild)', () => {

'- SEO: 91',

'- PWA: 30',

];

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin({

+ fail_deploy_on_score_thresholds: 'true',

+ }).onPostBuild({ utils: mockUtils });

expect(formatMockLog(console.log.mock.calls)).toEqual(logs);

});

@@ -62,14 +64,18 @@ describe('lighthousePlugin with single report per run (onPostBuild)', () => {

"Summary for path 'example/': Performance: 100, Accessibility: 100, Best Practices: 100, SEO: 91, PWA: 30",

};

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin({

+ fail_deploy_on_score_thresholds: 'true',

+ }).onPostBuild({ utils: mockUtils });

expect(mockUtils.status.show).toHaveBeenCalledWith(payload);

});

it('should not output errors, or call fail events', async () => {

mockConsoleError();

- await lighthousePlugin().onPostBuild({ utils: mockUtils });

+ await lighthousePlugin({

+ fail_deploy_on_score_thresholds: 'true',

+ }).onPostBuild({ utils: mockUtils });

expect(console.error).not.toHaveBeenCalled();

expect(mockUtils.build.failBuild).not.toHaveBeenCalled();

expect(mockUtils.build.failPlugin).not.toHaveBeenCalled();

diff --git a/src/e2e/success-onsuccess.test.js b/src/e2e/success-onsuccess.test.js

index e396e3f3..bee74c2d 100644

--- a/src/e2e/success-onsuccess.test.js

+++ b/src/e2e/success-onsuccess.test.js

@@ -19,7 +19,6 @@ describe('lighthousePlugin with single report per run (onSuccess)', () => {

beforeEach(() => {

resetEnv();

jest.clearAllMocks();

- process.env.LIGHTHOUSE_RUN_ON_SUCCESS = 'true';

process.env.DEPLOY_URL = 'https://www.netlify.com';

});

diff --git a/src/index.js b/src/index.js

index e1bbfa96..0cd4b329 100644

--- a/src/index.js

+++ b/src/index.js

@@ -6,12 +6,11 @@ import getUtils from './lib/get-utils/index.js';

dotenv.config();

export default function lighthousePlugin(inputs) {

- // Run onPostBuild by default, unless LIGHTHOUSE_RUN_ON_SUCCESS env var is set to true, or run_on_success is specified in plugin inputs

+ // Run onSuccess by default, unless inputs specify we should fail_deploy_on_score_thresholds

const defaultEvent =

- inputs?.run_on_success === 'true' ||

- process.env.LIGHTHOUSE_RUN_ON_SUCCESS === 'true'

- ? 'onSuccess'

- : 'onPostBuild';

+ inputs?.fail_deploy_on_score_thresholds === 'true'

+ ? 'onPostBuild'

+ : 'onSuccess';

if (defaultEvent === 'onSuccess') {

return {

diff --git a/src/index.test.js b/src/index.test.js

index 8162cb54..e814b296 100644

--- a/src/index.test.js

+++ b/src/index.test.js

@@ -3,7 +3,9 @@ import lighthousePlugin from './index.js';

describe('lighthousePlugin plugin events', () => {

describe('onPostBuild', () => {

it('should return only the expected event function', async () => {

- const events = lighthousePlugin();

+ const events = lighthousePlugin({

+ fail_deploy_on_score_thresholds: 'true',

+ });

expect(events).toEqual({

onPostBuild: expect.any(Function),

});

@@ -11,12 +13,6 @@ describe('lighthousePlugin plugin events', () => {

});

describe('onSuccess', () => {

- beforeEach(() => {

- process.env.LIGHTHOUSE_RUN_ON_SUCCESS = 'true';

- });

- afterEach(() => {

- delete process.env.LIGHTHOUSE_RUN_ON_SUCCESS;

- });

it('should return only the expected event function', async () => {

const events = lighthousePlugin();

expect(events).toEqual({

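Reviewer note: the event selection introduced in the `src/index.js` hunk can be sketched in isolation as below. This is a minimal illustration, not the plugin's code: `selectEvent` is a hypothetical helper name, and only the conditional is taken from the diff. It shows that the check is a strict comparison against the string `'true'`, so under this logic any other value (including a boolean `true`) would fall through to `onSuccess`.

```javascript
// Hypothetical helper (name assumed for illustration) mirroring the
// conditional in the src/index.js hunk: onSuccess is the default event,
// and onPostBuild is chosen only when the input is the string 'true'.
function selectEvent(inputs) {
  return inputs?.fail_deploy_on_score_thresholds === 'true'
    ? 'onPostBuild'
    : 'onSuccess';
}

// No inputs at all: the plugin audits the live deploy.
console.log(selectEvent());

// String 'true', as written in the netlify.toml example in this diff.
console.log(selectEvent({ fail_deploy_on_score_thresholds: 'true' }));

// Boolean true does not satisfy the strict string comparison.
console.log(selectEvent({ fail_deploy_on_score_thresholds: true }));
```

This is why the README and test changes in this diff pass the value as the quoted string `"true"` rather than a bare boolean.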